Training Confidence-Calibrated Classifiers for Detecting Out-of-Distribution Samples

By design, discriminatively trained neural network classifiers produce reliable predictions only for in-distribution samples. Let x ∈ X denote an input and y ∈ Y = {1, ..., K} a label.

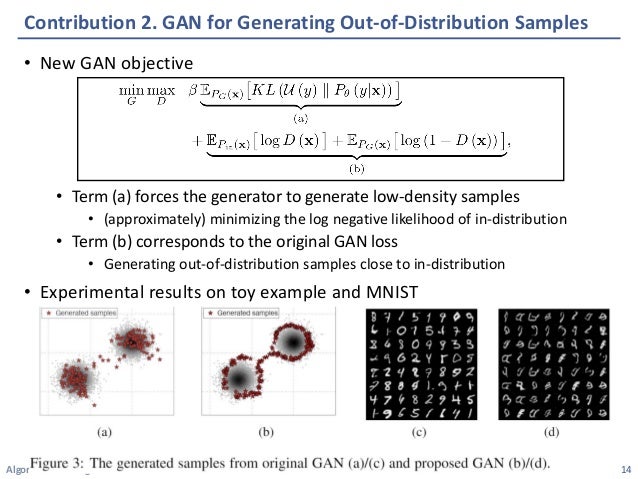

The problem of detecting whether a test sample is from in-distribution (i.e., the training distribution of a classifier) or from out-of-distribution sufficiently different from it arises in many real-world machine learning applications. The paper proposes two additional terms added to the original loss (e.g., cross entropy). The first forces the classifier to be less confident on out-of-distribution samples, and the second implicitly generates the most effective training samples for the first. Usage help: `python3 main.py -h`.

Detecting whether a test sample is from in-distribution (i.e., the training distribution of a classifier) can be formulated as a binary classification problem. However, state-of-the-art deep neural networks are known to be highly overconfident in their predictions, i.e., they do not distinguish in-distribution from out-of-distribution inputs. For real-world deployment, detecting out-of-distribution (OOD) samples is essential.
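The binary formulation above can be sketched as a threshold test on the classifier's confidence. This is a minimal illustration, assuming the confidence score is the maximum softmax probability; the function names and the threshold value are hypothetical and do not come from the project's code.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def confidence_score(logits):
    """Assumed score: the maximum predicted class probability."""
    return max(softmax(logits))


def is_in_distribution(logits, threshold):
    """Binary decision: in-distribution if the score reaches the threshold."""
    return confidence_score(logits) >= threshold
```

For example, `is_in_distribution([10.0, 0.0, 0.0], 0.9)` accepts a sharply peaked prediction, while a near-uniform prediction is rejected. An overconfident network defeats this test because it also assigns peaked predictions to OOD inputs.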

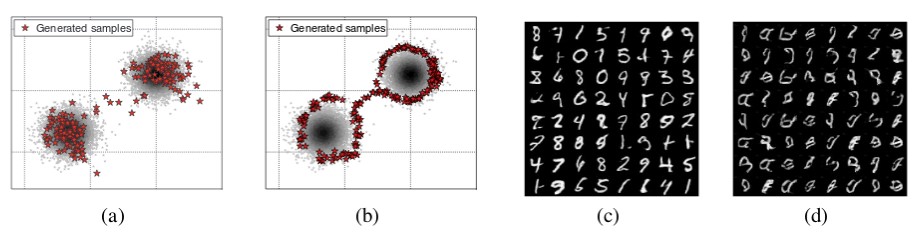

The confidence loss requires training samples from out-of-distribution, which are often hard to sample.
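A confidence loss of this kind can be sketched in plain Python. The sketch assumes the form used in the paper: cross entropy on labeled in-distribution samples plus a weighted KL divergence from the uniform distribution to the prediction on out-of-distribution samples. The helper names and the `beta` default are illustrative assumptions, not the repository's API.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax over a list of logits."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_z for z in logits]


def cross_entropy(logits, label):
    """Negative log-likelihood of the true label."""
    return -log_softmax(logits)[label]


def kl_uniform_to_pred(logits):
    """KL(U || P), where U is uniform over the K classes."""
    k = len(logits)
    log_u = math.log(1.0 / k)
    return sum((1.0 / k) * (log_u - lp) for lp in log_softmax(logits))


def confidence_loss(in_batch, out_batch, beta=1.0):
    """in_batch: list of (logits, label); out_batch: list of logits."""
    ce = sum(cross_entropy(l, y) for l, y in in_batch) / len(in_batch)
    kl = sum(kl_uniform_to_pred(l) for l in out_batch) / len(out_batch)
    return ce + beta * kl
```

The KL term is zero only when the prediction on an out-of-distribution sample is exactly uniform, so minimizing it pushes the classifier toward low confidence on such samples. The `out_batch` argument is where the hard-to-obtain out-of-distribution samples enter.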

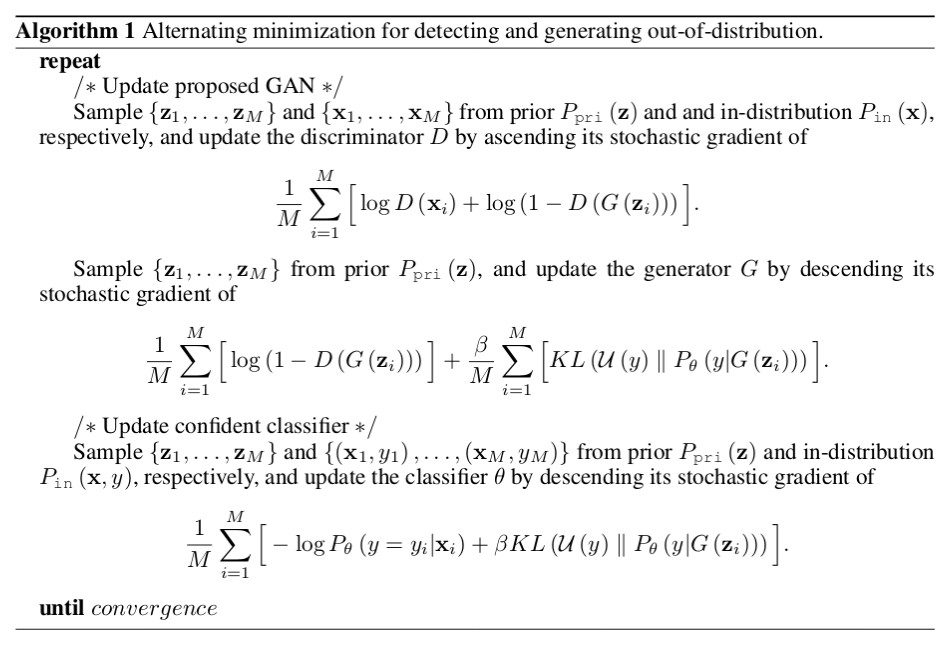

This project is for the paper "Training Confidence-Calibrated Classifiers for Detecting Out-of-Distribution Samples". In essence, the method jointly trains both classification and generative neural networks for out-of-distribution detection.
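The joint training of the classifier and the generative network can be written as a single objective. The following is a sketch of that objective as best recalled from the paper, so the exact notation is an assumption: $\theta$ denotes the classifier parameters, $G$ and $D$ the GAN generator and discriminator, $\mathcal{U}(y)$ the uniform distribution over labels, and $\beta > 0$ a penalty weight.

```latex
\min_{G} \max_{D} \min_{\theta} \;
  \mathbb{E}_{P_{\text{in}}(\hat{x}, \hat{y})}
    \big[ -\log P_{\theta}(y = \hat{y} \mid \hat{x}) \big]
  + \beta \, \mathbb{E}_{P_{G}(x)}
    \big[ \mathrm{KL}\big( \mathcal{U}(y) \,\|\, P_{\theta}(y \mid x) \big) \big]
  + \mathbb{E}_{P_{\text{in}}(\hat{x})} \big[ \log D(\hat{x}) \big]
  + \mathbb{E}_{P_{G}(x)} \big[ \log \big( 1 - D(x) \big) \big]
```

The first term is the usual cross entropy, the second is the confidence penalty evaluated on generated samples, and the last two are the standard GAN terms.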

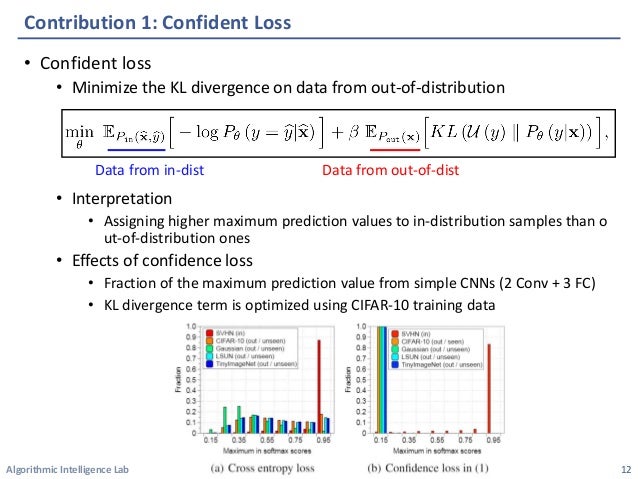

Published as a conference paper at ICLR 2018: "Training Confidence-Calibrated Classifiers for Detecting Out-of-Distribution Samples" by Kimin Lee, Honglak Lee, Kibok Lee, and Jinwoo Shin (Korea Advanced Institute of Science and Technology, Daejeon, Korea; University of Michigan, Ann Arbor, MI 48109; Google Brain, Mountain View, CA 94043). The overconfidence issue of DNNs is highly related to the problem of detecting out-of-distribution samples.

Some code is from odin-pytorch. The red bar reports the detection accuracy for the cross-entropy loss when CIFAR-10 is used as the in-distribution data and SVHN as the out-of-distribution data. To handle the difficulty of sampling out-of-distribution data, the authors propose a joint training scheme for the confident classifier and the proposed GAN, which generates boundary samples in the low-density area of in-distribution, i.e., close to out-of-distribution.
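Detection accuracy can be computed from confidence scores alone. The sketch below assumes one common definition: the best balanced accuracy over all candidate thresholds, with in-distribution and out-of-distribution samples treated as equally likely. The function name and the toy scores are hypothetical, and the project's own evaluation code may differ.

```python
def detection_accuracy(in_scores, out_scores):
    """Best balanced accuracy of the rule 'score >= threshold means in-distribution'.

    Assumes equal priors for in- and out-of-distribution samples.
    """
    best = 0.0
    # Only observed scores can change the decision, so they suffice as thresholds.
    for t in sorted(set(in_scores) | set(out_scores)):
        true_in = sum(s >= t for s in in_scores) / len(in_scores)
        true_out = sum(s < t for s in out_scores) / len(out_scores)
        best = max(best, 0.5 * (true_in + true_out))
    return best


# Toy confidence scores, invented for illustration only.
in_scores = [0.9, 0.8, 0.95]
out_scores = [0.3, 0.6, 0.85]
accuracy = detection_accuracy(in_scores, out_scores)
```

With the toy scores, one overconfident out-of-distribution score (0.85) overlaps the in-distribution range, so no threshold separates the two sets perfectly.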

Various hyperparameters can be set before training; the help output of `python3 main.py -h` lists them.

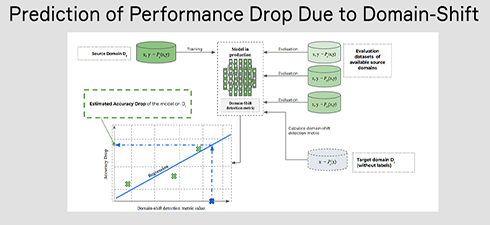

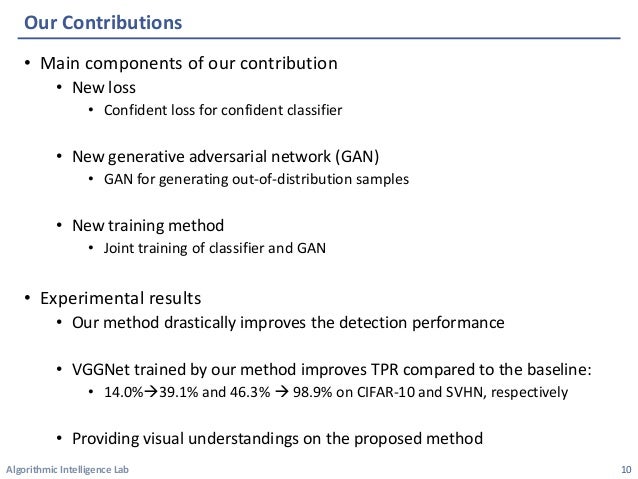

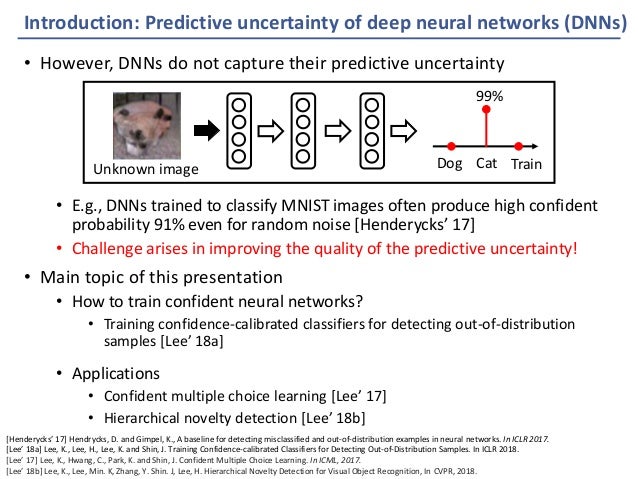

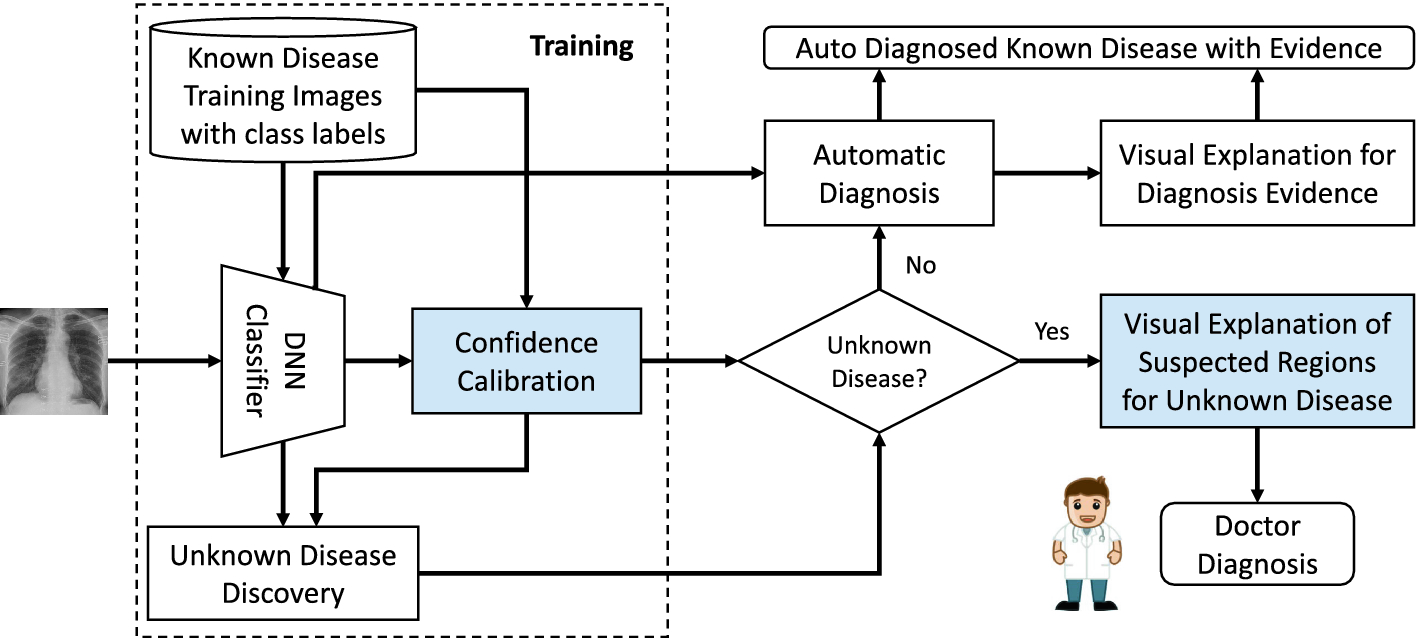

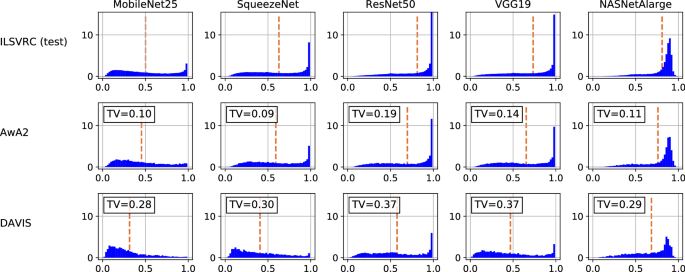

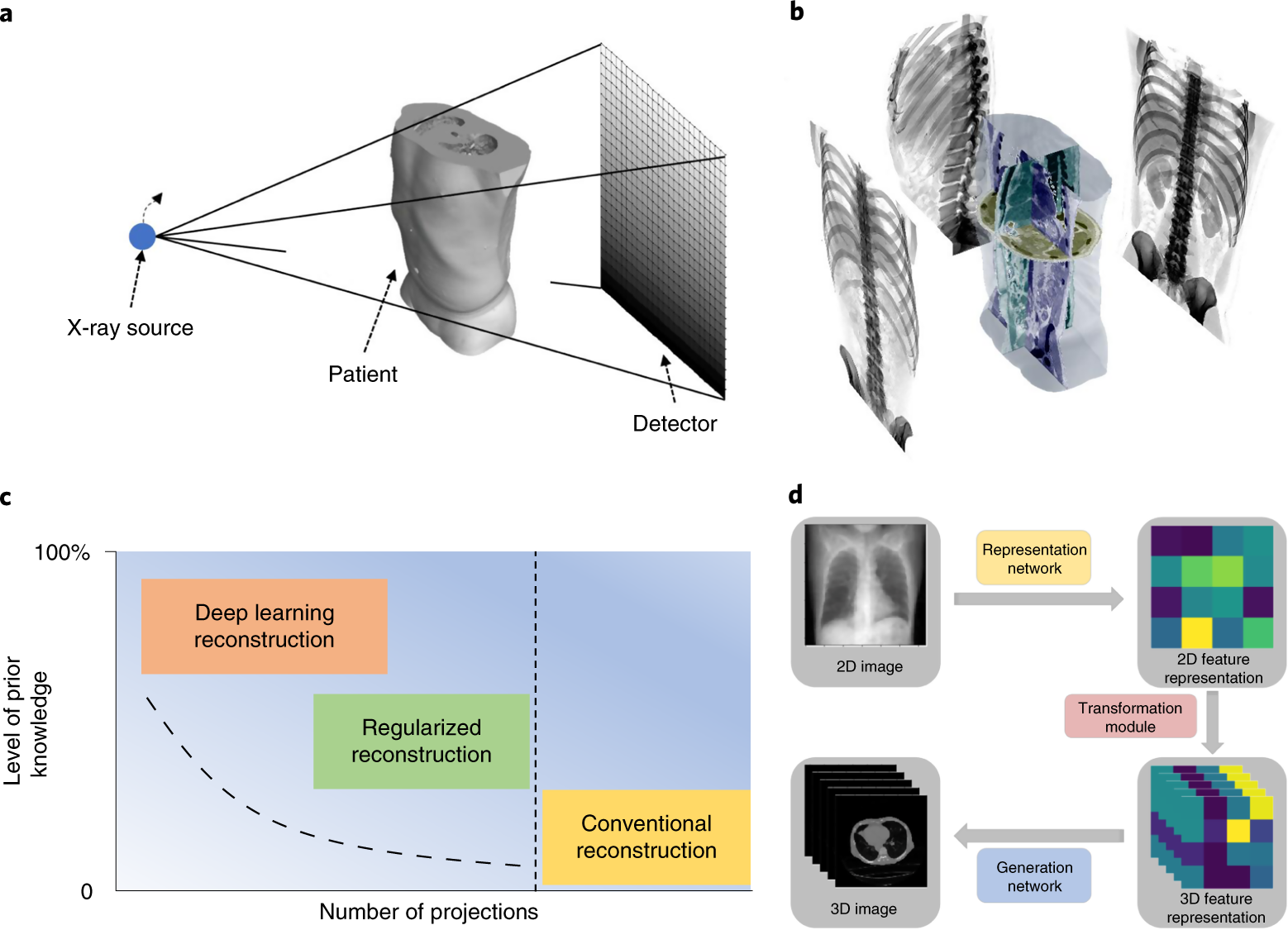
